Configurable Embodied Data Generation for

Class-Agnostic RGB-D Video Segmentation

1University of Michigan

2Amazon Lab126

3National Tsing Hua University

Abstract

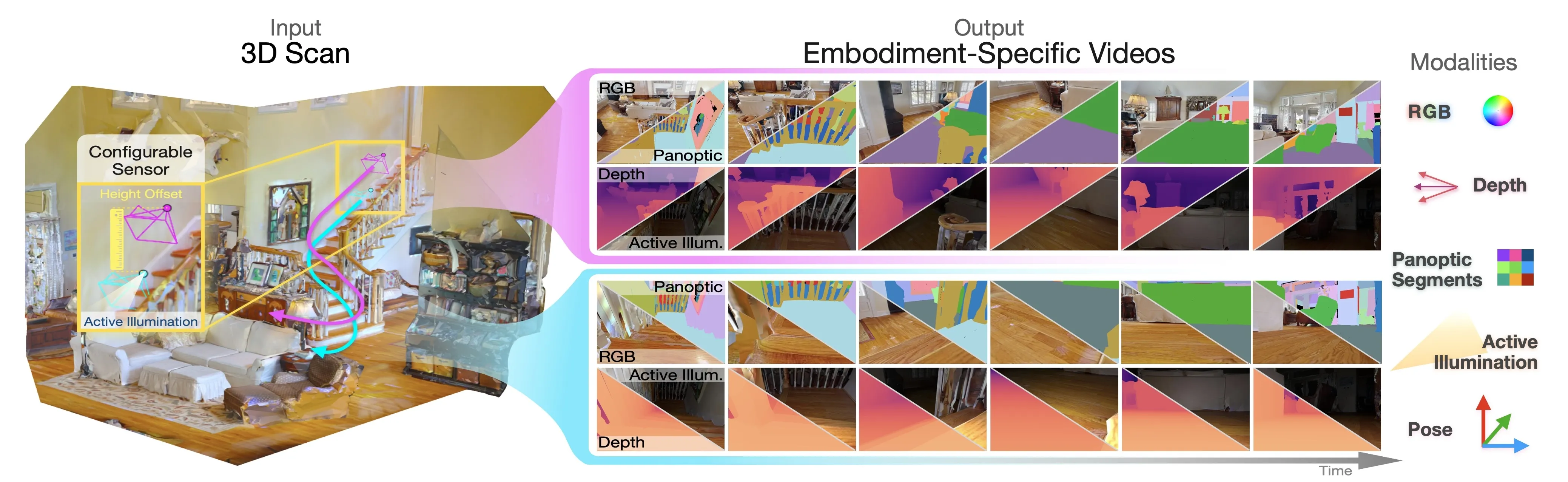

This paper presents a method for generating large-scale datasets to improve class-agnostic video segmentation across robots with different form factors. Specifically, we consider the question of whether video segmentation models trained on generic segmentation data could be more effective for particular robot platforms if robot embodiment is factored into the data generation process. To answer this question, a pipeline is formulated for using 3D reconstructions (e.g. from HM3DSem[Yadav et al. CVPR’23]) to generate segmented videos that are configurable based on a robot’s embodiment (e.g. sensor type, sensor placement, and illumination source). A resulting massive RGB-D video panoptic segmentation dataset (MVPd) is introduced for extensive benchmarking with foundation and video segmentation models, as well as to support embodiment-focused research in video segmentation. Our experimental findings demonstrate that using MVPd for finetuning can lead to performance improvements when transferring foundation models to certain robot embodiments, such as specific camera placements. These experiments also show that using 3D modalities (depth images and camera pose) can lead to improvements in video segmentation accuracy and consistency.

Massive Video Panoptic Dataset (MVPd)

MVPd is introduced to support research on embodied class-agnostic video instance segmentation and the potential for 3D modalities to benefit video segmentation algorithms. In total, MVPd contains 18,000 densely annotated RGB-D videos, 6,055,628 individual image frames with ground truth 6DoF pose, and 162,115,039 masks. Videos are rendered in simulation from scenes of HM3DSem, containing real-world building-scale domestic environments such as homes, offices, and retail spaces. Each video in MVPd contains between 100 and 600 image frames rendered at 640x480 resolution, and covers an average distance of 7.48m. Annotated segments are assigned to one of 40 Matterport categories[Chang et al. 3DV’17]. Example videos from MVPd are visualized below:

RGB Videos

Depth Videos

Panoptic Videos

Sensor Placement Control

The MVPd dataset controls for sensor placement to enable experiments that measure the impact of a robot’s embodiment on class-agnostic video instance segmentation models. For each trajectory within the MVPd dataset, an embodiment specification is set to generate one video where the robot’s camera sensor is placed 1m above the scene floor and a second video where the sensor is placed at 0.1m above the floor. These settings emulate the challenging perspectives taken by home robots of differing embodiments. Examples videos showcasing the sensor placement control data are visualized below:

Videos at 1m

Videos at 0.1m

MVPd Data Generation Pipeline

The key insights inspiring MVPd’s data generation pipeline are that (1) instance annotations at the mesh-level enable inexpensive segment annotations at the video-level and (2) the mesh representation for scenes enables embodiment-specific configuration for each video at scale. Based on these insights, the MVPd data generation pipeline is developed as a scalable solution for creating simulated video segmentation datasets that are customised to specific robot embodiments. By using 3D scene reconstructions (e.g. from HM3DSem[Yadav et al. CVPR’23]) of real-world, cluttered, domestic environments the MVPd data generation pipeline can control for robot embodiments in simulation while generating large-scale and densely annotated segmentation videos.

Illustration of the data generation pipeline used to create MVPd. Left: Using input meshes that contain RGB and segmentation textures, the pipeline generates sparse random paths with a collision-free NavMesh planner [Savva et al. ICCV'19] and interpolates them into dense trajectories of way poses. Right: The motion trajectories are refined according to an embodiment configuration file and rendered to output videos.

Illumination Control

In addition to controlling for a robot’s sensor placement, the MVPd data generation pipeline can also control for scene illumination source and strength. In particular, ambient and active scene illumination at varying strengths can be simulated, together with ground truth segment annotations, to use for controlled experiments or model fine-tuning. Notably under active illumination, the appearance of object textures changes as the robot moves. The example videos below illustrate trajectories from the MVPd dataset rendered with varying illumination source and strength (Low Power = 20Watts, High Power = 200Watts) by the data generation pipeline:

Ambient Illumination

Active Illumination High Power

Active Illumination Low Power

Citation

@article{opipari2024mvpd,

author={Opipari, Anthony and Krishnan, Aravindhan K and Gayaka, Shreekant and Sun, Min and Kuo, Cheng-Hao and Sen, Arnie and Jenkins, Odest Chadwicke},

journal={IEEE Robotics and Automation Letters},

title={Configurable Embodied Data Generation for Class-Agnostic RGB-D Video Segmentation},

year={2024},

doi={10.1109/LRA.2024.3486213}

}

article

article

Code

Code